Fine-tuning unlocks powerful customization capabilities for large language models when basic prompting falls short or when you're facing throughput and latency constraints. By fine-tuning, you can optimize for privacy, efficiency, and cost while maintaining complete ownership of your model, without worrying about providers changing their models unexpectedly. If any of the following bullets resonate with you or your team, you are in the right place!

- Proprietary Data 🔒: Incorporate domain-specific knowledge that major AI models don't have access to

- Cost Efficiency 💰: Reduce API expenses by running smaller models locally or in your own infrastructure for well-defined tasks

- Latency/Throughput Gains ⚡: Achieve lower latency and higher throughput with optimized, smaller models that can run on minimal hardware

- Customizability 🛠️: Tailor model behavior, output style, and specialization to your exact needs

These competitive edges are enticing, until you're knee-deep in training scripts at 3 AM, restarting a job because CUDA ran out of memory 99% through training. Fine-tuning requires specialized ML expertise, expensive compute infrastructure, and countless hours debugging training pipelines. Your focus as an AI engineer should be on building great models that power your products, not on infrastructure.

How We Built It

We decided to partner with Baseten to take on the challenge of building an end-to-end fine-tuning workflow into Oxen.ai, so that you can go from dataset to model in a few clicks. Simply upload your dataset, select your preferences, and let Oxen do the grunt work.

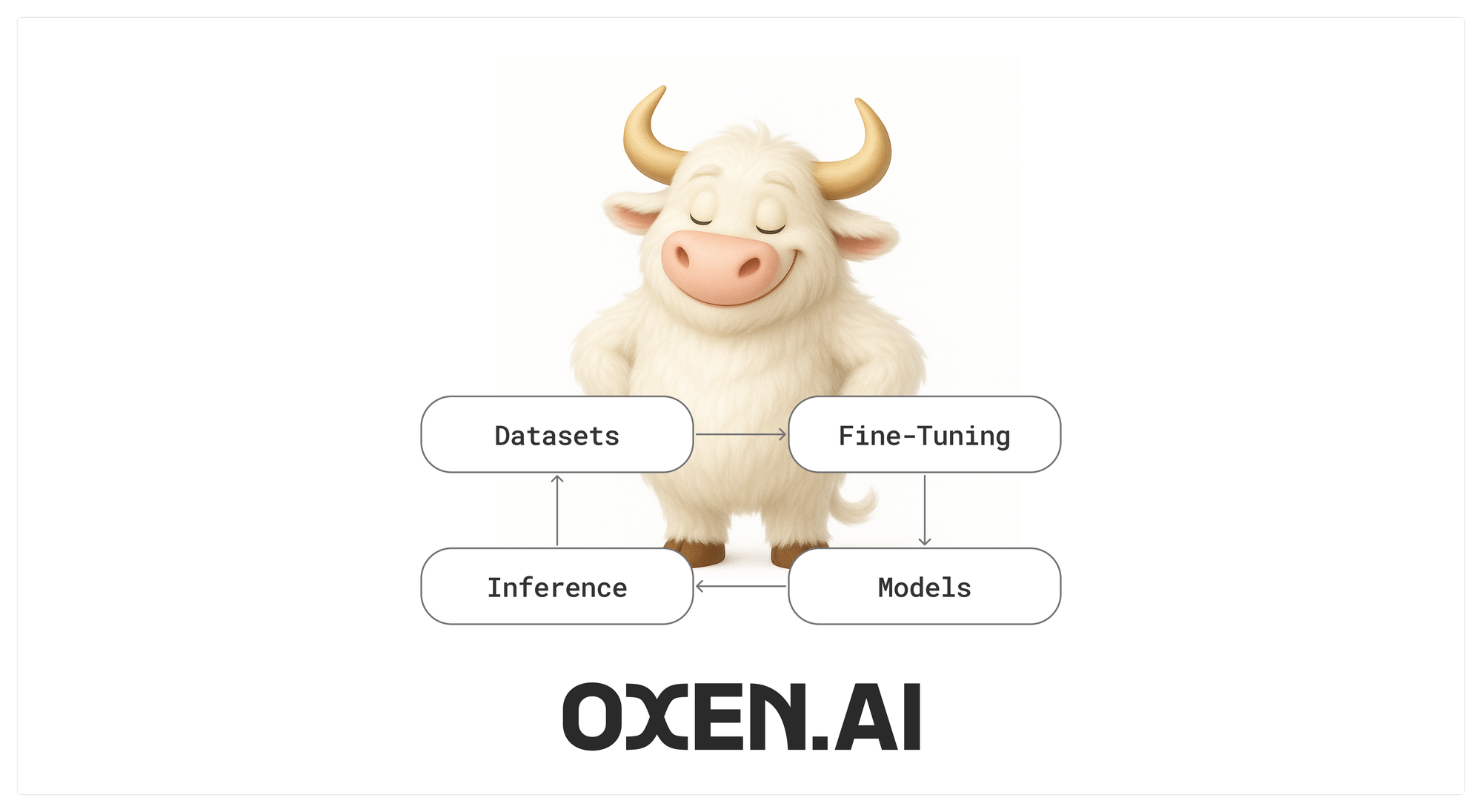

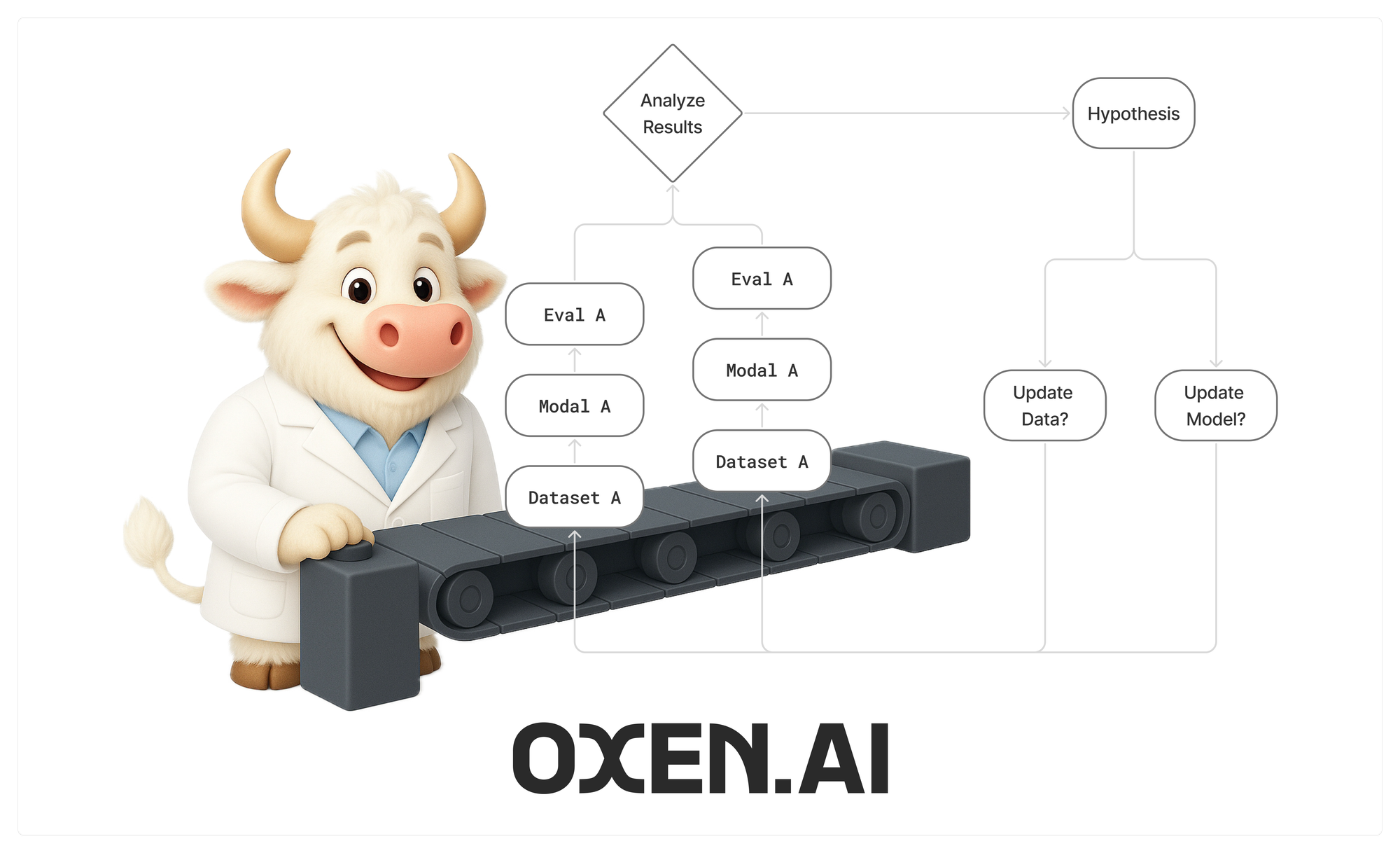

Luckily, Oxen's infrastructure already provided the building blocks for the complete AI development lifecycle, including large asset versioning and orchestration of serverless GPUs for running models. Many developers, including ourselves, had already built custom fine-tuning workflows on top of Oxen.ai. All we had to do was connect the pieces together into an integrated experience in the Oxen.ai web hub. This allows anyone to set up a data flywheel that improves their model over time.

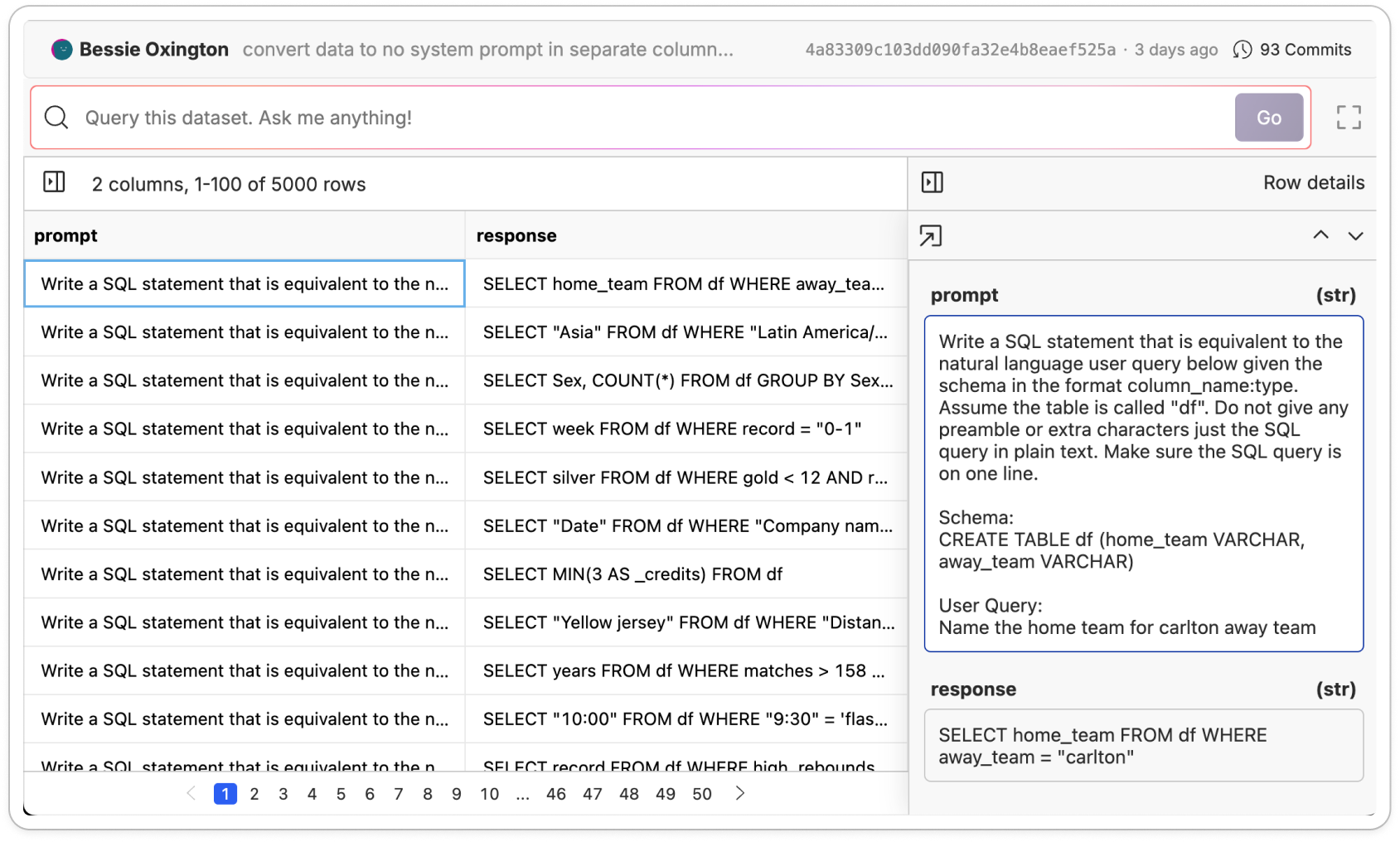

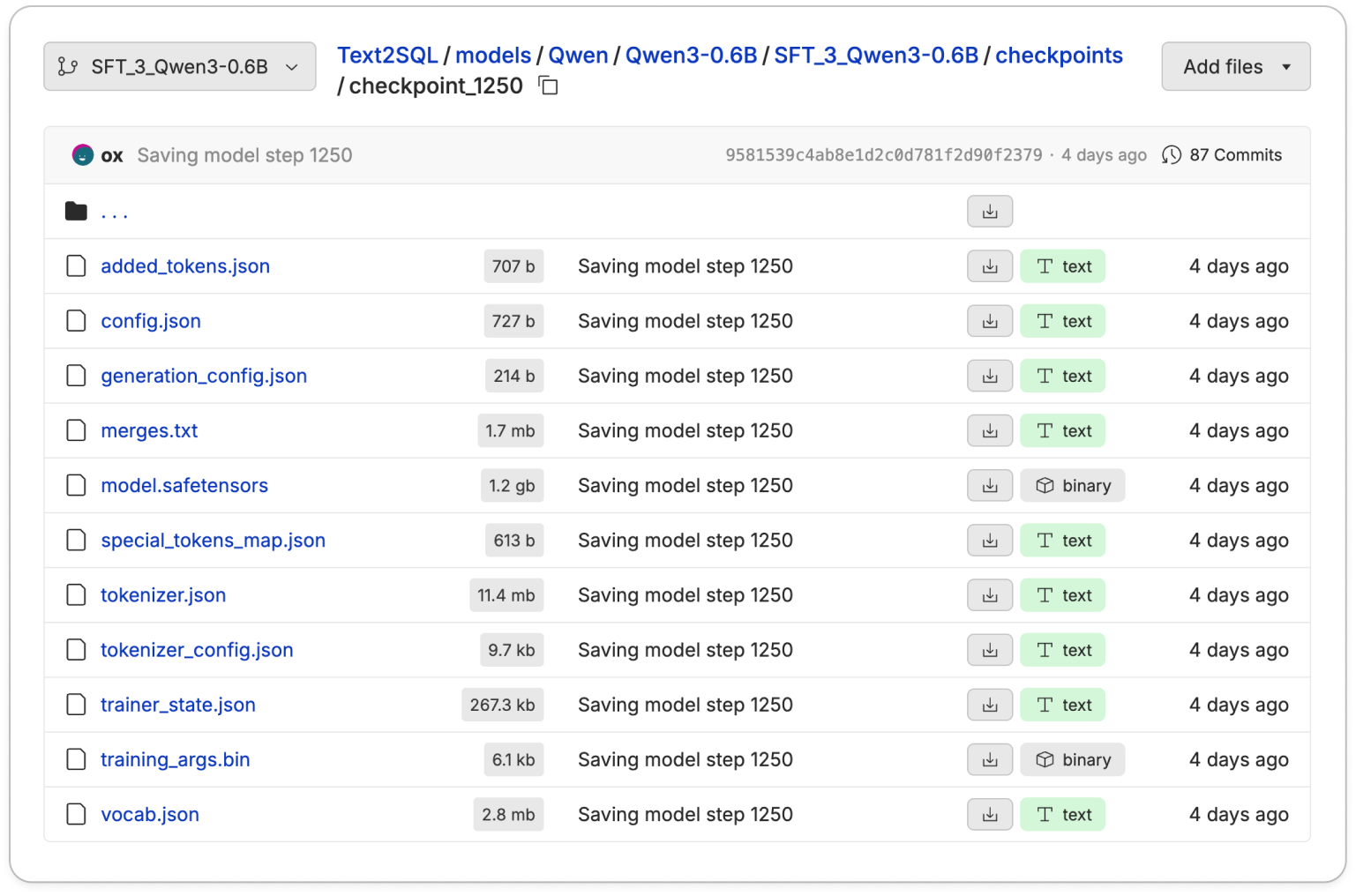

Behind the scenes, we use Oxen's blazing fast version control system as the backbone to store model weights and datasets. The underlying fine-tuning code is deployed from notebooks onto GPU clusters provided by Baseten. Each fine-tune gives you access to the raw underlying files so that you can easily connect datasets or models from your own infrastructure. All the inputs and outputs are versioned so that your experiments are reproducible and easy to track.

How To Use It

Fine-tuning on Oxen.ai is as easy as uploading your dataset, selecting a model, and kicking off a job. The dataset can be any of the supported tabular file formats such as csv, tsv, json, or parquet. Select which fields constitute the prompt and response and Oxen will handle the heavy lifting under the hood.
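Before uploading, it can help to sanity-check that the two fields you plan to select are present and filled in. The sketch below is only an illustration using Python's standard `csv` module. It is not part of Oxen.ai, and the column names `question` and `answer` and the helper `check_prompt_response_columns` are hypothetical stand-ins for whatever fields your dataset uses.

```python
import csv
import io


def check_prompt_response_columns(csv_text, prompt_col, response_col):
    """Return (usable_row_count, problems) for CSV text with prompt/response columns.

    Hypothetical helper for illustration; column names are supplied by the caller.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    header = reader.fieldnames or []
    missing = [c for c in (prompt_col, response_col) if c not in header]
    if missing:
        return 0, [f"missing column: {c}" for c in missing]

    problems = []
    usable = 0
    # Rows are numbered with the header counted as row 1.
    for row_no, row in enumerate(reader, start=2):
        prompt = (row[prompt_col] or "").strip()
        response = (row[response_col] or "").strip()
        if not prompt or not response:
            problems.append(f"row {row_no}: empty prompt or response")
        else:
            usable += 1
    return usable, problems


# Tiny in-memory example: the second data row has no answer.
sample = "question,answer\nWhat is 2+2?,4\nCapital of France?,\n"
usable, problems = check_prompt_response_columns(sample, "question", "answer")
print(usable, problems)
```

For a file on disk, you could read its contents with `open(path, newline="").read()` and pass the text in; the same idea carries over to tsv by passing `delimiter="\t"` to `csv.DictReader`.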

The fine-tuning job runs on Baseten's distributed training infrastructure and saves the model weights to a branch on an Oxen repository.

If you are concerned about privacy and want a private deployment, let us know! We are happy to work with your team to deploy the end-to-end infrastructure in your cloud.

Get Early Access

We are releasing fine-tuning as a service to a select set of users first, so we can gather feedback and make sure it fits your needs. If you want early access, email us at hello@oxen.ai or apply on the form below.

Fine-Tuning Fridays

In the meantime, join our new weekly series called "Fine-Tuning Fridays"! Each week we will take an open-source model and put it head-to-head against a closed-source foundation model on a specialized task. We will be giving you practical examples with reference code, reference data, model weights, and the end-to-end infrastructure to reproduce experiments on your own.

The sessions will be live on Zoom on Fridays at 10am PST, similar to our Arxiv Dive series in the past. The recordings will be posted on YouTube and documented on our blog.

Best & Moo,

The Oxen.ai Herd